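```python
import matplotlib.pyplot as plt
import pandas as pd

plt.rcParams['font.sans-serif'] = ['SimHei']  # font that can render the Chinese labels

x = range(2009, 2019)  # the ten years covered, 2009-2018 (not used below)

data = pd.read_excel("income.xlsx")
```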

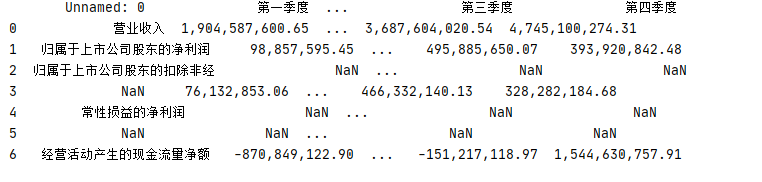

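```python
print(data)

# Transpose so that each company becomes a column
data = data.T

# Column 2 (the third company in the sheet) is stock code 002088
data = data.rename(columns={2: "002088"})

print(data)

data['002088'].plot()  # line chart of 002088's revenue
```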

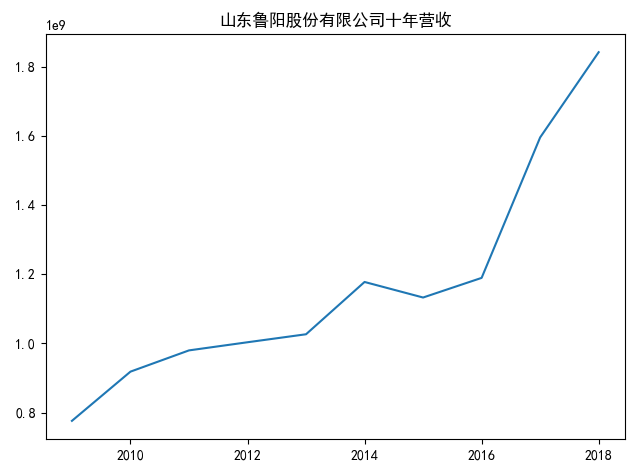

```python
plt.title("山东鲁阳股份有限公司十年营收")  # "Shandong Luyang Co., Ltd.: ten-year revenue"
plt.show()
```

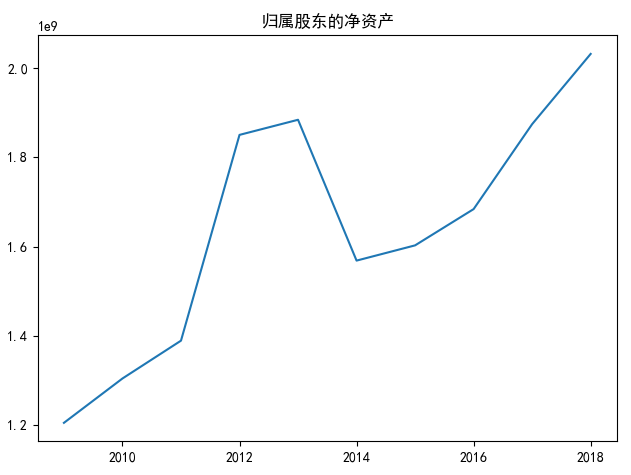

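The two figures above show that over the ten years covered (2009-2018), Shandong Luyang Co., Ltd.'s operating revenue and its net profit attributable to shareholders of the listed company both rose overall. In 2014, however, operating revenue fell, and net profit attributable to shareholders fell with it.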
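To locate a dip like the 2014 one programmatically rather than by eye, one option is to compute the year-over-year change of the revenue column and list the years where it is negative. This is a minimal sketch, assuming the revenue is a pandas Series indexed by year; `declining_years` is a hypothetical helper, and the numbers are made-up placeholders, not the company's reported figures.

```python
import pandas as pd


def declining_years(revenue: pd.Series) -> list:
    """Return the years (index labels) in which the value fell versus the year before."""
    change = revenue.pct_change()  # first entry is NaN: there is no previous year
    return revenue[change < 0].index.tolist()  # NaN < 0 is False, so year one is never flagged


# Placeholder numbers for illustration only, NOT the company's reported revenue
toy = pd.Series([100.0, 120.0, 135.0, 128.0, 150.0],
                index=[2011, 2012, 2013, 2014, 2015])
print(declining_years(toy))  # [2014]
```

Applied to the transposed table, the call would be `declining_years(data['002088'])`, provided that table's index holds the years.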

```python
import matplotlib.pyplot as plt
import pandas as pd

plt.rcParams['font.sans-serif'] = ['SimHei']  # so that Chinese labels render correctly

x = range(2009, 2019)

data = pd.read_excel("income.xlsx")
print(data)

data = data.T  # one column per company
```

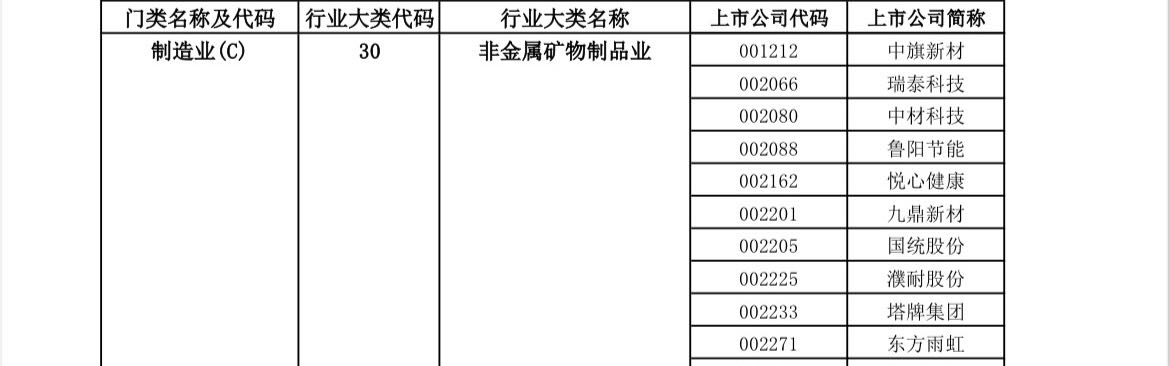

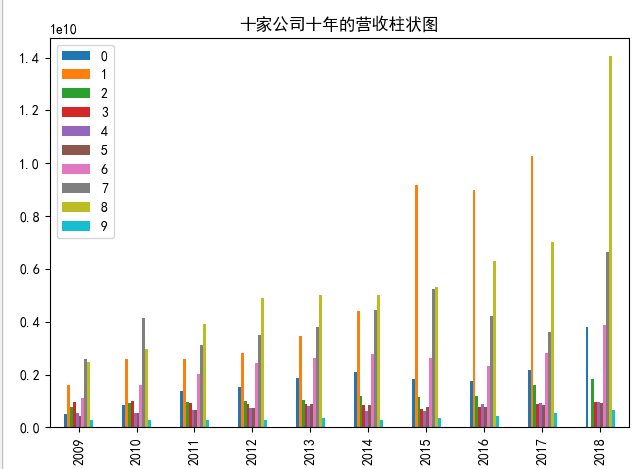

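Because recent annual reports could not be found for the ten selected companies, their 2009-2018 annual reports were used instead, and each company's operating revenue over those ten years was plotted. Two of the ten companies show a marked increase in operating revenue, while most of the others remain stable or rise gradually.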
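One way to make "marked increase" versus "stable or gradual" concrete is the compound annual growth rate, CAGR = (R_last / R_first)^(1/n) - 1, where n is the number of year-to-year steps. The sketch below labels each company column by its CAGR; the 15% cut-off, the helper names `cagr` and `classify`, and the toy table are assumptions for illustration only.

```python
import pandas as pd


def cagr(series: pd.Series) -> float:
    """Compound annual growth rate between the first and last values."""
    steps = len(series) - 1  # number of year-to-year steps
    return (series.iloc[-1] / series.iloc[0]) ** (1 / steps) - 1


def classify(table: pd.DataFrame, threshold: float = 0.15) -> dict:
    """Label each column (one company) by its CAGR; the threshold is an assumption."""
    return {
        code: "marked rise" if cagr(table[code]) >= threshold else "stable or gradual"
        for code in table.columns
    }


# Toy table: rows are years, columns are companies (made-up values)
toy = pd.DataFrame({"A": [100.0, 121.0, 146.41], "B": [100.0, 102.0, 104.0]},
                   index=[2016, 2017, 2018])
print(classify(toy))  # {'A': 'marked rise', 'B': 'stable or gradual'}
```

CAGR looks only at the endpoints, so a company with a dip in the middle (like 2014 above) can still score high; pairing it with a year-over-year check covers that case.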

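```python
import json
import os
import re
import time

import requests


def read_l(txt):
    """Read one stock code per line from a UTF-8 text file, skipping blank lines."""
    with open(txt, encoding='UTF-8') as f:
        return [line.strip() for line in f if line.strip()]


def downloadpdf(pdf_url, filename):
    """Download a PDF and save it to `filename`."""
    pdf = requests.get(pdf_url, timeout=30)
    pdf.raise_for_status()
    with open(filename, 'wb') as f:
        f.write(pdf.content)


def find_text(text, l):
    """Return True if any keyword in `l` occurs in `text`."""
    return any(i in text for i in l)


def rename(file_name, firm_id):
    """Build '<firm_id>_<year>_<period>.pdf' from an announcement title.

    Period code = position in s_l + 1: 1 = Q1, 2 = half-year, 3 = Q3, 4 = annual.
    Returns None if the title has no year or no period keyword.
    """
    years = re.findall(pattern, file_name)
    if not years:
        return None
    for s in s_l:
        if s in file_name:
            season = str(s_l.index(s) + 1)
            return f'{firm_id}_{years[0]}_{season}.pdf'
    return None


pattern = '[0-9]{4}'  # the first four-digit number in the title is the report year
# Q1, half-year, Q3, annual ('年年度' matches titles such as '2018年年度报告')
s_l = ['第一季度', '半年度', '第三季度', '年年度']
```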

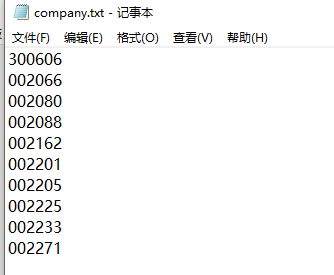

```python
txt = r'D:\crawler_SZSE-main\not_metal\company.txt'  # one stock code per line
firm_ids = read_l(txt)

date = ["2010-12-31", "2023-5-29"]  # announcement date range to query

path = r'D:\crawler_SZSE-main\not_metal\nMteal'  # folder prefix: each company gets nMteal<code>

l = ['摘要', '取消', '正文']  # skip titles containing "summary", "cancelled" or "main text"

# Announcement-list API of the Shenzhen Stock Exchange
url = 'http://www.szse.cn/api/disc/announcement/annList?random=0.8015180112682705'

# Browser-like request headers. 'Content-Length' is not hard-coded:
# requests computes it from the actual request body.
headers = {'Accept': 'application/json, text/javascript, */*; q=0.01',
           'Accept-Encoding': 'gzip, deflate',
           'Accept-Language': 'en-US,en;q=0.9,zh-CN;q=0.8,zh;q=0.7',
           'Connection': 'keep-alive',
           'Content-Type': 'application/json',
           'DNT': '1',
           'Host': 'www.szse.cn',
           'Origin': 'http://www.szse.cn',
           'Referer': 'http://www.szse.cn/disclosure/listed/fixed/index.html',
           'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/83.0.4103.116 Safari/537.36',
           'X-Request-Type': 'ajax',
           'X-Requested-With': 'XMLHttpRequest'}
```

#payload,获取源代码

for firm_id in firm_ids:

dirname = path+f'{firm_id}'

os.mkdir(dirname)

for page in range(1,5):

try:

payload = {'seDate': date,

'stock': ["{firm_id}".format(firm_id=firm_id)],

'channelCode': ["fixed_disc"],

'pageSize': 30,

'pageNum': '{page}'.format(page=page)}

response = requests.post(url, headers=headers, data=json.dumps(payload))

doc = response.json()

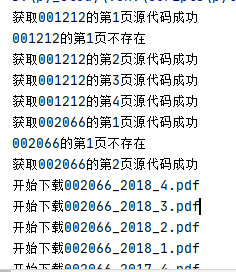

if response.status_code==200:

print('获取{0}的第{1}页源代码成功'.format(firm_id,page))

datas = doc.get('data')

for i in range(len(datas)):

data = datas[i]

pdf_url = 'http://disc.static.szse.cn/download'+data.get('attachPath')

title = data.get('title')

publish_time = data.get('publishTime')[:9]

filename = rename(title,firm_id)

if find_text(title,l):

continue

else:

downloadpdf(pdf_url, dirname+'/'+filename)

print(f'开始下载{filename}')

time.sleep(2)

except:

print('{0}的第{1}页不存在'.format(firm_id,page))

pass
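The filtering step inside the download loop can be pulled out into a pure function, which makes it testable without contacting the SZSE server. This is a sketch, assuming the response shape the loop relies on (a `data` list whose records carry `title` and `attachPath`); `pick_downloads` is a hypothetical name, and the fake response is invented for illustration.

```python
from typing import List, Tuple

# Prefix and skip words copied from the crawler above
DOWNLOAD_PREFIX = 'http://disc.static.szse.cn/download'
SKIP_WORDS = ['摘要', '取消', '正文']  # summary, cancelled, main text


def pick_downloads(doc: dict) -> List[Tuple[str, str]]:
    """Return (title, pdf_url) pairs for the records worth downloading."""
    picked = []
    for record in doc.get('data') or []:  # 'data' may be missing or null
        title = record.get('title') or ''
        attach = record.get('attachPath')
        if not attach or any(word in title for word in SKIP_WORDS):
            continue  # no file attached, or the title has an excluded keyword
        picked.append((title, DOWNLOAD_PREFIX + attach))
    return picked


fake = {'data': [
    {'title': '2018年年度报告', 'attachPath': '/a.pdf'},
    {'title': '2018年年度报告摘要', 'attachPath': '/b.pdf'},
]}
print(pick_downloads(fake))  # [('2018年年度报告', 'http://disc.static.szse.cn/download/a.pdf')]
```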

```python
import os
import pdfplumber

# Walk the folder tree and collect the PDF files
file_list = []  # full paths of the selected PDFs
file_dir = r'D:\crawler_SZSE-main\not_metal'  # root folder to search
```

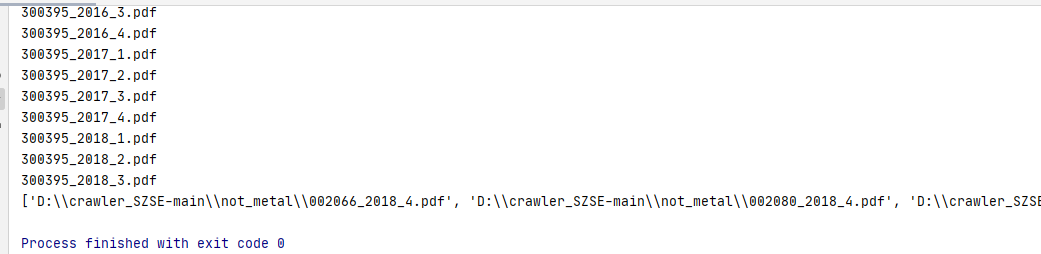

```python
# os.walk yields (folder, subfolders, files) for file_dir and every subfolder below it
for root, dirs, files in os.walk(file_dir):
    for file in files:
        print(file)
        if os.path.splitext(file)[1] in ('.PDF', '.pdf'):  # keep PDF files only
            if file.endswith("2018_4.pdf"):  # 2018 annual report (period code 4)
                # Join with the folder the file actually lives in, not with file_dir
                file_list.append(os.path.join(root, file))
print(file_list)
```

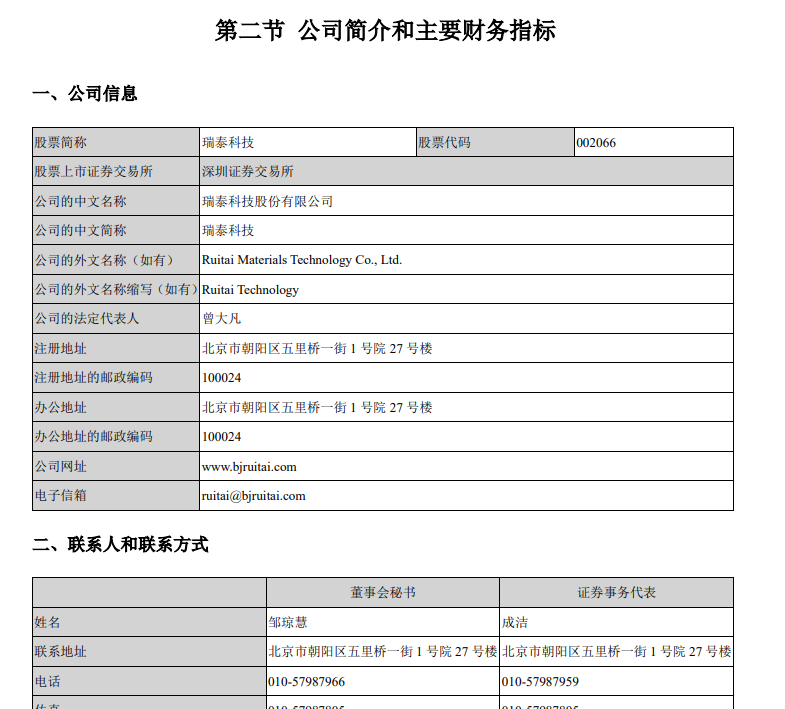
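The same search can be written with `pathlib`, where every match already carries its full path. This sketch (hypothetical helper `find_reports`) also accepts an upper-case `.PDF` extension, which an `endswith("2018_4.pdf")` test would miss.

```python
from pathlib import Path
from typing import List


def find_reports(root: str, suffix: str = '2018_4') -> List[Path]:
    """Recursively collect PDFs whose file name (without extension) ends with `suffix`.

    The extension check is case-insensitive, so both .pdf and .PDF match.
    """
    return sorted(
        p for p in Path(root).rglob('*')
        if p.is_file() and p.suffix.lower() == '.pdf' and p.stem.endswith(suffix)
    )
```

For example, `find_reports(r'D:\crawler_SZSE-main\not_metal')` would list the 2018 annual reports in all company subfolders, sorted by path.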

```python
# The 2018 annual reports, with their paths written out explicitly
file_list = [
    'D:\\crawler_SZSE-main\\not_metal\\nMteal002066\\002066_2018_4.pdf',
    'D:\\crawler_SZSE-main\\not_metal\\nMteal002080\\002080_2018_4.pdf',
    'D:\\crawler_SZSE-main\\not_metal\\nMteal002088\\002088_2018_4.pdf',
    'D:\\crawler_SZSE-main\\not_metal\\nMteal002162\\002162_2018_4.pdf',
    'D:\\crawler_SZSE-main\\not_metal\\nMteal002205\\002205_2018_4.pdf',
    'D:\\crawler_SZSE-main\\not_metal\\nMteal002225\\002225_2018_4.pdf',
    'D:\\crawler_SZSE-main\\not_metal\\nMteal002233\\002233_2018_4.pdf',
    'D:\\crawler_SZSE-main\\not_metal\\nMteal002271\\002271_2018_4.pdf',
]
```

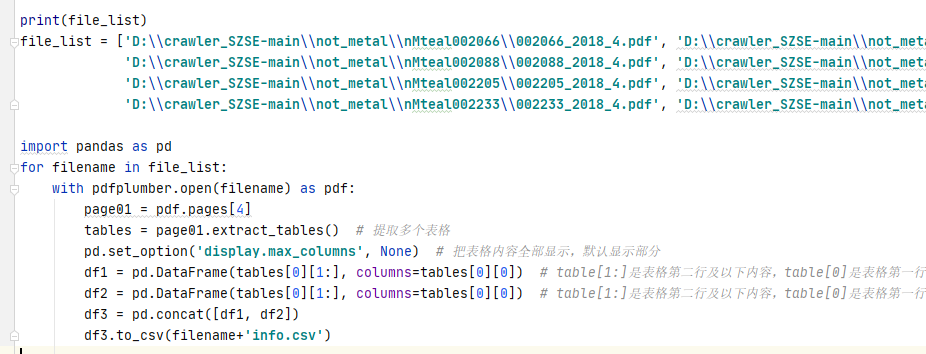

```python
import os

import pandas as pd
import pdfplumber

pd.set_option('display.max_columns', None)  # show every column when printing

for filename in file_list:
    with pdfplumber.open(filename) as pdf:
        page01 = pdf.pages[4]  # 5th page of the report (0-based index 4)
        tables = page01.extract_tables()  # every table on that page
    if not tables:
        continue  # no table found on this page
    # Stack the rows of all tables on the page; each table's first (header) row
    # is kept as an ordinary row so tables with different headers can be combined
    rows = [row for table in tables for row in table]
    df3 = pd.DataFrame(rows)
    df3.to_csv(os.path.splitext(filename)[0] + '_info.csv')  # e.g. ...\002066_2018_4_info.csv
```
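pdfplumber returns each table as a list of rows whose cells are strings or `None`, wrapped cells can contain line breaks, and amounts in the reports typically keep thousands separators such as `1,000`. This cleaning sketch (hypothetical helpers `table_to_frame` and `to_number`, with sample rows invented for illustration) turns such a table into a DataFrame with a numeric column.

```python
import pandas as pd


def _clean(cell) -> str:
    """Empty string for None; drop line breaks left inside wrapped cells."""
    return '' if cell is None else str(cell).replace('\n', '')


def table_to_frame(table: list) -> pd.DataFrame:
    """Treat the first row as the header and the remaining rows as data."""
    header, *rows = table
    return pd.DataFrame([[_clean(c) for c in row] for row in rows],
                        columns=[_clean(c) for c in header])


def to_number(column: pd.Series) -> pd.Series:
    """'1,000' -> 1000.0; anything non-numeric (including '') -> NaN."""
    return pd.to_numeric(column.str.replace(',', '', regex=False), errors='coerce')


# Invented rows in the shape pdfplumber produces
sample = [['项目', '2018年', '2017年'],
          ['营业收入(元)', '1,000', '900'],
          ['净利\n润', None, '50']]
df = table_to_frame(sample)
df['2018年'] = to_number(df['2018年'])
print(df)
```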

```python
import pdfplumber
import pandas as pd
from openpyxl import Workbook  # writes .xlsx files; install with `pip install openpyxl`
```


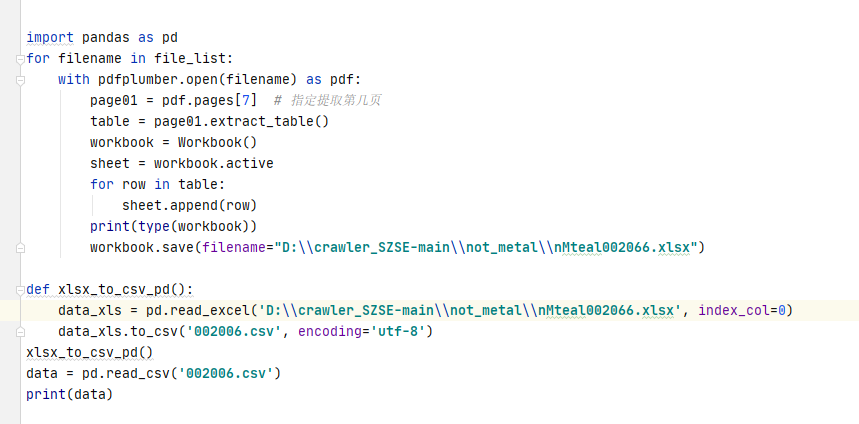

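```python
import os

import pdfplumber
from openpyxl import Workbook

for filename in file_list:
    with pdfplumber.open(filename) as pdf:
        page01 = pdf.pages[7]  # page to read: the 8th page (0-based index 7)
        table = page01.extract_table()  # largest table on the page, or None
    if table is None:
        continue
    workbook = Workbook()
    sheet = workbook.active
    for row in table:
        sheet.append(row)
    # One workbook per company (e.g. nMteal002066.xlsx) instead of overwriting a single file
    firm_id = os.path.basename(filename).split('_')[0]
    workbook.save(filename=f"D:\\crawler_SZSE-main\\not_metal\\nMteal{firm_id}.xlsx")
```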

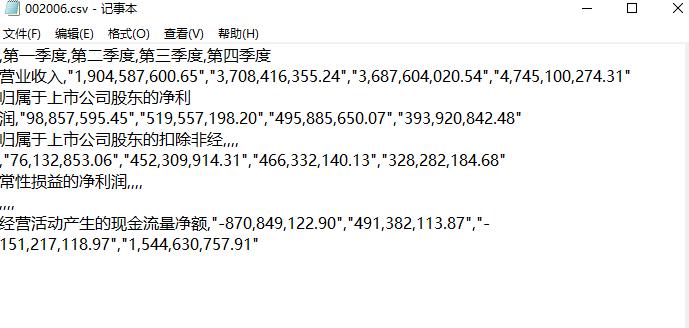

```python
import pandas as pd


def xlsx_to_csv_pd():
    """Convert the workbook for company 002066 to CSV."""
    data_xls = pd.read_excel('D:\\crawler_SZSE-main\\not_metal\\nMteal002066.xlsx', index_col=0)
    data_xls.to_csv('002066.csv', encoding='utf-8')


xlsx_to_csv_pd()

data = pd.read_csv('002066.csv')
print(data)
```
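If the `.xlsx` file is only an intermediate step, the extracted rows can be written straight to CSV with the standard `csv` module. This is a sketch, assuming `table` is a list of rows as returned by `extract_table()`; `write_table_csv` is a hypothetical name. The `utf-8-sig` encoding adds a byte-order mark so that Excel detects UTF-8 and shows the Chinese text correctly.

```python
import csv
from typing import Iterable, Optional, Sequence


def write_table_csv(table: Iterable[Sequence[Optional[str]]], path: str) -> None:
    """Write a list-of-rows table to CSV; csv.writer turns None cells into empty fields."""
    with open(path, 'w', newline='', encoding='utf-8-sig') as f:
        csv.writer(f).writerows(table)
```

Inside the PDF loop this would replace the workbook code with a single call such as `write_table_csv(table, firm_id + '.csv')`.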

(4)income_any.py

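```python
import matplotlib.pyplot as plt
import pandas as pd

plt.rcParams['font.sans-serif'] = ['SimHei']  # so that Chinese labels render correctly

x = range(2009, 2019)  # years covered (not used below)

data = pd.read_excel("income.xlsx")
print(data)

data = data.T  # one column per company
# data = data.rename(columns={0: "002066"})
print(data)

data.plot.bar()  # grouped bars: every company, every year
data[2].plot()  # column 2 as a line on the same axes; the label is the integer 2, not the string '2'
plt.title("十家公司十年的营收柱状图")  # "Bar chart of the ten companies' revenue over ten years"
plt.show()
```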